Robot Localization using Object Tracking

This project attempts to model robot localization on the way humans localize themselves while navigating the real world. Humans often use their semantic understanding of the environment to track entire ‘objects of interest’, rather than relying only on features/keypoints (as most VIO/SLAM methods do).

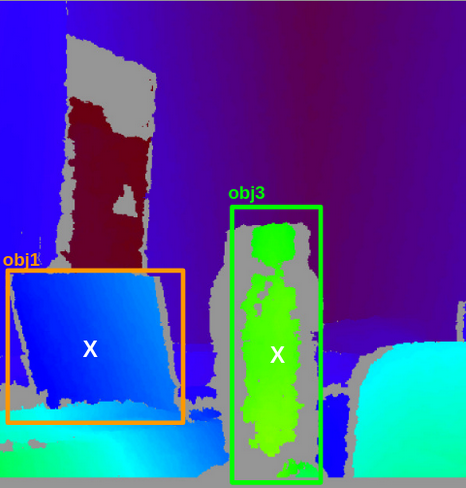

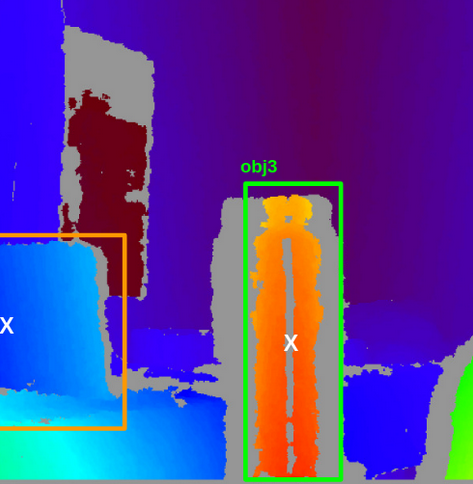

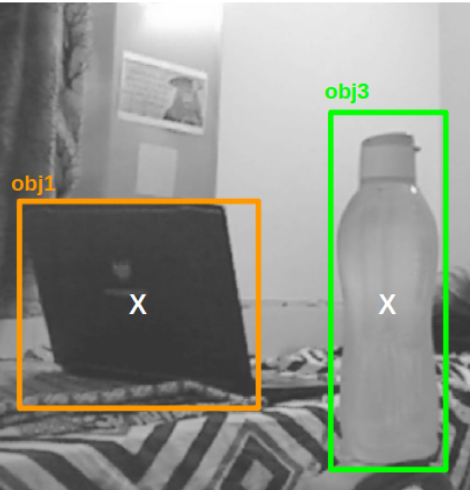

The focus of this project was on exploring a new method inspired by human navigation, not on developing a robust localization system. A calibrated Kinect was used to obtain a depth map and a monochrome image. Objects of interest were detected in the images using YOLOv3, and their approximate 3D locations were estimated. These objects were then tracked across frames (without using features) to obtain a localization prior.
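The README does not spell out how the 3D locations were estimated or how the tracks were turned into a prior, so the following is only a minimal sketch of one plausible reading, not the project's code. It assumes a pinhole camera model with intrinsics `fx, fy, cx, cy` from the Kinect calibration, bounding boxes `(x_min, y_min, x_max, y_max)` from a detector such as YOLOv3, a depth map in metres registered to the image (0 marks invalid pixels), static objects, and no camera rotation between frames. All function names and the 0.5 m gating threshold are hypothetical.

```python
from dataclasses import dataclass
from math import dist
from statistics import median


@dataclass
class Intrinsics:
    """Pinhole intrinsics (assumed to come from the Kinect calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def backproject(u, v, z, K):
    """Back-project pixel (u, v) at depth z (metres) into the camera frame."""
    return ((u - K.cx) * z / K.fx, (v - K.cy) * z / K.fy, z)


def object_position(box, depth, K):
    """Approximate 3D position of a detected object (hypothetical helper).

    Uses the median of the valid depth readings inside the bounding box,
    back-projected at the box centre. Returns None if no depth is valid.
    """
    x0, y0, x1, y1 = box
    zs = [depth[v][u] for v in range(y0, y1) for u in range(x0, x1) if depth[v][u] > 0]
    if not zs:
        return None
    return backproject((x0 + x1) / 2, (y0 + y1) / 2, median(zs), K)


def match_objects(prev, curr, max_jump=0.5):
    """Greedy frame-to-frame association without image features.

    prev and curr are lists of (label, (x, y, z)). An object in prev is
    matched to the nearest unused object in curr with the same class label,
    provided it moved less than max_jump metres (an assumed gate).
    Returns a list of (prev_index, curr_index) pairs.
    """
    matches, used = [], set()
    for i, (label_p, p) in enumerate(prev):
        best, best_d = None, max_jump
        for j, (label_c, c) in enumerate(curr):
            if j in used or label_c != label_p:
                continue
            d = dist(p, c)
            if d < best_d:
                best, best_d = j, d
        if best is not None:
            used.add(best)
            matches.append((i, best))
    return matches


def translation_prior(prev, curr, matches):
    """Translation-only camera motion prior from matched static objects.

    If the camera translates by t without rotating, every static object's
    camera-frame position shifts by -t, so t is minus the mean displacement.
    """
    if not matches:
        return None
    n = len(matches)
    return tuple(
        -sum(curr[j][1][k] - prev[i][1][k] for i, j in matches) / n for k in range(3)
    )


if __name__ == "__main__":
    prev = [("chair", (0.0, 0.0, 2.0)), ("tv", (1.0, 0.0, 3.0))]
    curr = [("tv", (0.9, 0.0, 2.8)), ("chair", (-0.1, 0.0, 1.8))]
    m = match_objects(prev, curr)
    print(m, translation_prior(prev, curr, m))
```

Here both objects appear to shift by roughly (-0.1, 0, -0.2) m, so the prior is a camera translation of roughly (0.1, 0, 0.2) m. Dropping the no-rotation assumption would require estimating a full rigid transform from three or more non-collinear matched object positions (for example with the Kabsch/SVD method); the translation-only version is kept here for brevity.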

This project was done as part of the Cognitive Neuroscience course at BITS Goa in Fall 2019, with the aim of bringing ideas from the brain’s navigation mechanisms into robotics.