Multispectral Drone Imagery for Agriculture

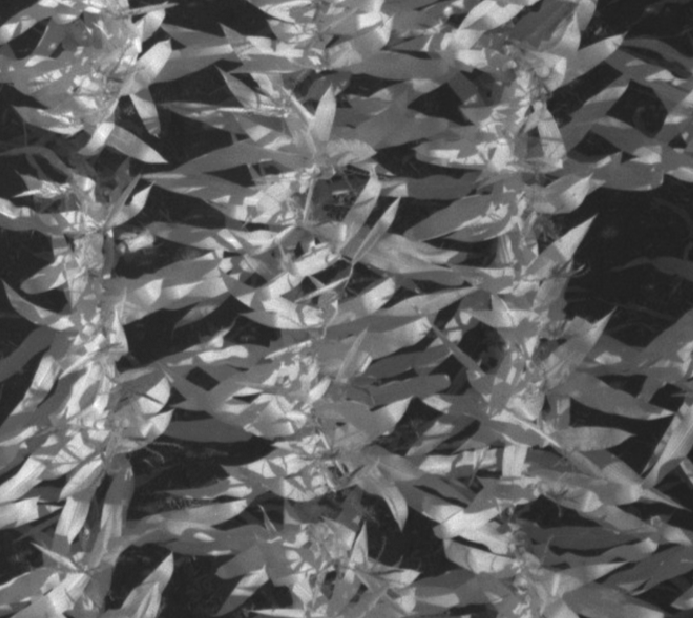

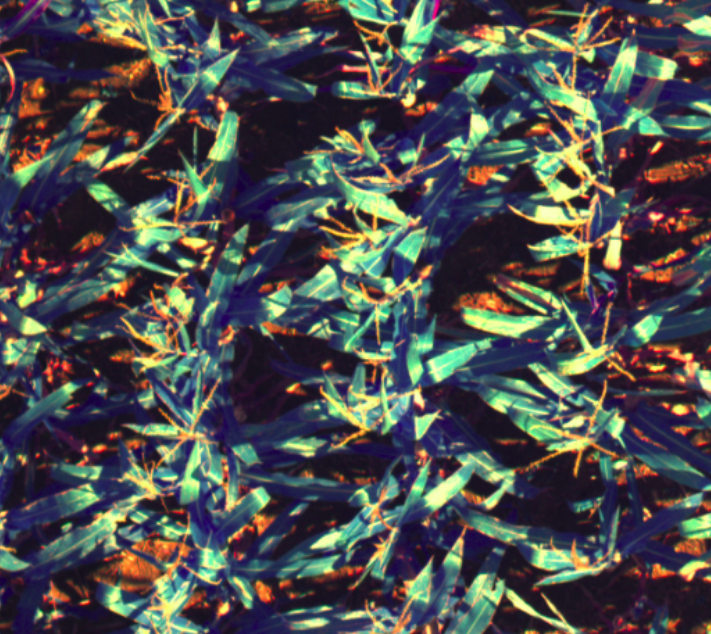

Precision agriculture often requires images in both the infrared and visible spectra to infer nutritional information about a crop. In this project, multispectral images of a field were captured by flying a drone over it, and a method for aligning the images from the different spectral bands was developed.

Infrared images differ significantly from visual-spectrum images (in texture, intensity gradients, etc.), so standard feature-based computer vision methods cannot be relied on to match them. For multi-channel registration, the project instead used an iterative method that maximises the ‘Mattes Mutual Information’ between the visual and infrared images. Additionally, a method for estimating a prior transform between channels was developed, to move towards real-time performance.
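
To illustrate why mutual information suits this problem, here is a minimal sketch in plain Python. It is not the project's code and makes several simplifying assumptions: it uses an ordinary joint-histogram estimate of mutual information rather than the Mattes estimator (which uses Parzen-window density estimates over sampled pixels), it replaces the iterative optimiser with an exhaustive search over integer translations, and it runs on a synthetic image pair in which the "infrared" channel is simply the contrast-inverted, shifted "visual" channel. All function names and parameters (`mutual_information`, `register_translation`, `prior`, `radius`, `make_demo_pair`) are hypothetical.

```python
import math
import random

def mutual_information(a, b, bins=8):
    """Joint-histogram mutual information (in nats) of two equal-length
    lists of intensities in [0, 1]. A simplification of Mattes MI."""
    n = len(a)
    joint, pa, pb = {}, {}, {}
    for x, y in zip(a, b):
        i = min(int(x * bins), bins - 1)
        j = min(int(y * bins), bins - 1)
        joint[(i, j)] = joint.get((i, j), 0) + 1
        pa[i] = pa.get(i, 0) + 1
        pb[j] = pb.get(j, 0) + 1
    # sum over occupied cells of p(i,j) * log(p(i,j) / (p(i) * p(j)))
    return sum((c / n) * math.log(c * n / (pa[i] * pb[j]))
               for (i, j), c in joint.items())

def overlap_pixels(fixed, moving, dx, dy):
    """Pair fixed[y][x] with moving[y + dy][x + dx] wherever both exist."""
    h, w = len(fixed), len(fixed[0])
    fa, mb = [], []
    for y in range(max(0, -dy), min(h, h - dy)):
        for x in range(max(0, -dx), min(w, w - dx)):
            fa.append(fixed[y][x])
            mb.append(moving[y + dy][x + dx])
    return fa, mb

def register_translation(fixed, moving, prior=(0, 0), radius=3):
    """Try every integer shift within `radius` of `prior` and return the
    (dx, dy) with the highest mutual information, plus that MI value."""
    best_shift, best_mi = prior, -math.inf
    for dy in range(prior[1] - radius, prior[1] + radius + 1):
        for dx in range(prior[0] - radius, prior[0] + radius + 1):
            fa, mb = overlap_pixels(fixed, moving, dx, dy)
            mi = mutual_information(fa, mb)
            if mi > best_mi:
                best_shift, best_mi = (dx, dy), mi
    return best_shift, best_mi

def make_demo_pair(true_shift=(2, -1), size=30, margin=5, seed=0):
    """Synthetic pair: `fixed` is a random texture, `moving` is the same
    scene with inverted contrast, displaced by `true_shift` pixels."""
    rng = random.Random(seed)
    full = size + 2 * margin
    scene = [[rng.random() for _ in range(full)] for _ in range(full)]
    dx, dy = true_shift
    fixed = [[scene[y + margin][x + margin] for x in range(size)]
             for y in range(size)]
    moving = [[1.0 - scene[v + margin - dy][u + margin - dx]
               for u in range(size)] for v in range(size)]
    return fixed, moving

fixed, moving = make_demo_pair()
shift, mi = register_translation(fixed, moving)            # 49 candidate shifts
shift_p, mi_p = register_translation(fixed, moving,
                                     prior=(2, -1), radius=1)  # 9 candidates
print("recovered shift:", shift, "with prior:", shift_p)
```

Because the second channel has inverted contrast, intensity differences or gradient-based features would not line up, yet the mutual information still peaks at the true displacement. The second call hints at why a prior transform helps: starting near the answer lets the search cover far fewer candidates, which in this toy setting plays the role that a good initial estimate plays for an iterative optimiser.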

This was a short, month-long project carried out in July 2020 with the Autonomous Robots Lab (University of Nevada, Reno), under Prof Kostas Alexis.